Meta has officially launched its AI-powered voice translation feature on Facebook and Instagram for users around the world. The rollout is a step toward making content more accessible across languages and cultures.

How the New Translation Feature Works

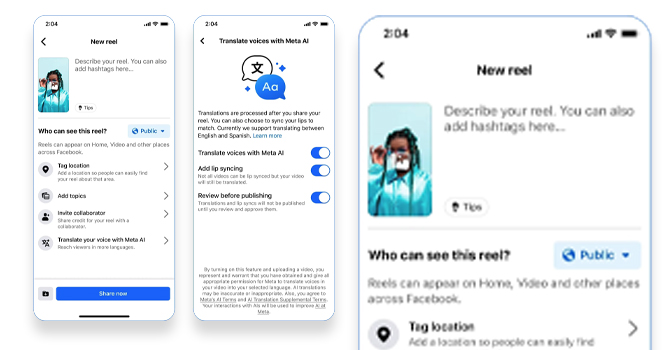

The new feature lets creators translate their content into other languages so that it can reach a wider audience. It uses AI to reproduce the creator’s own voice in the translated version, making it sound more authentic and natural. Creators can also turn on a lip-sync option that aligns the translated voice with their lip movements, providing a smoother viewing experience.

From Testing to Global Rollout

This feature was first introduced at Meta’s Connect developer conference last year, where it was tested with automatic translations of creators’ voices in reels. At launch, translations are available between English and Spanish, with more languages expected to be added in the near future.

How Creators Can Use the Tool

To use the feature, creators select “Translate your voice with Meta AI” before publishing their reel, then decide whether to include lip-syncing. The translated content is automatically generated once the reel is published. Creators can preview the translation and lip-sync before posting and can disable either setting at any time.

Who Gets Access First

Meta has limited access at launch to Facebook creators with 1,000 or more followers and all public Instagram accounts in regions where Meta AI is supported. Viewers will see a note on translated reels indicating that Meta AI was used. Those who prefer not to view translations in certain languages can opt out in the settings menu.

New Insights for Creators

In addition to translations, Meta is introducing new insights for creators. They can now view metrics showing how many people are engaging with their content by language. This will help creators better understand the reach of their work as the platform expands to support more languages.

Meta has also provided recommendations for best use. Creators should face forward, speak clearly, and avoid background noise. The feature supports up to two speakers, but requires that they do not talk over one another.

Expanding Beyond AI with Dubbed Tracks

Facebook creators will also be able to upload up to 20 of their own dubbed audio tracks in the Meta Business Suite. This lets them reach audiences beyond English- and Spanish-speaking viewers. Unlike the automatic AI translations, these tracks can be added before or after publishing a reel.

What’s Next for Meta’s AI Strategy

Meta has not yet announced which languages will be supported next, but the company believes the feature will help creators build larger global audiences. Adam Mosseri, head of Instagram, stated that the goal is to help creators connect with people across cultural and linguistic barriers, ultimately growing their reach and impact.

This launch comes as Meta is restructuring its AI division to focus on research, superintelligence, products, and infrastructure, a sign of how central AI has become to its long-term strategy.
